First Class Goto

Oort is an experimental programming language I have been working on, on and off (mostly off), since 2007. It is a statically typed, object-oriented, imperative language, where classes, functions and methods can be nested arbitrarily, and where functions and methods are full closures, ie., they can be stored in variables and returned from functions. The control structures are the usual ones: if, for, while, do, goto, etc.

It also has an unusual feature: goto labels are first class.

What does it mean for labels to be first class? It means two things: (1) they are lexically scoped so that they are visible from inside nested functions. This makes it possible to jump from any point in the program to any other location that is visible from that point, even if that location is in another function. And (2) labels can be used as values: They can be passed to and returned from functions and methods, and they can be stored in data structures.

As a simple example, consider a data structure with a “foreach” method that takes a callback function and calls it for every item in the data structure. In Oort this might look like this:

table: array[person_t]; table.foreach (fn (p: person_t) -> void { print p.name; print p.age; });

A note about syntax. In Oort, anonymous functions are defined like this:

fn (<arguments>) -> <return type> { ...; }

and variables and arguments are declared like this:

<name>: <type>

so the code above defines an anonymous function that prints the name and the age of person and passes that function to the foreach method of the table.

What if we want to stop the iteration? You could have the callback

return true to stop, or you could have it throw an

exception. However, both methods are a little clumsy: The first

because the return value might be useful for other purposes, the

second because stopping the iteration isn’t really an exceptional

situation.

With lexically scoped labels there is a direct solution – just use

goto to jump out of the callback:

table.foreach (fn (p: person_t) -> void { print p.name; print p.age; if (p.age > 50) goto done; }); @done:

Note what’s going on here: Once we find a person older than 50, we jump out of the anonymous callback and back into the enclosing function. The git tree has a running example.

Call/cc in terms of goto

In Scheme and some other languages there is a feature called call/cc, which is famous for being both powerful and mindbending. What it does is, it takes the concept of “where we are in the program” and packages it up as a function. This function, called the continuation, is then passed to another, user-defined, function. If the user-defined function calls the continuation, the program will resume from the point where call/cc was invoked. The mindbending part is that a continuation can be stored in data structures and called multiple times, which means the call/cc invocation can in effect return more than once.

Lexically scoped labels are at least as expressive as call/cc, because if you have them, you can write call/cc as a function:

call_cc (callback: fn (k: fn()->void)) -> void { callback (fn() -> void { goto current_continuation; }); @current_continuation: }

Let’s see what’s going on here. A function called call_cc() is defined:

call_cc (...) -> void { }

This function takes another function as argument:

callback: fn (...) -> void

And that function takes the continuation as an argument:

k: fn()->void

The body of call/cc calls the callback:

callback (...);

passing an anonymous function (the continuation):

fn() -> void { goto current_continuation; } @current_continuation:

that just jumps to the point where call_cc returns. So when callback

decides to invoke the continuation, execution will resume at the point

where call_cc was invoked. Since there is nothing stopping

callback from storing the continuation in a data structure or from

invoking it multiple times, we have the full call/cc semantics.

Cooperative thread system

One of the examples on the Wikipedia page about

call/cc

is a cooperative thread system. With the call_cc function above, we

could directly translate the Wikipedia code into Oort, but using the

second aspect of the first-class-ness of labels – that they can be

stored directly in data structures – makes it possible to write a

more straightforward version:

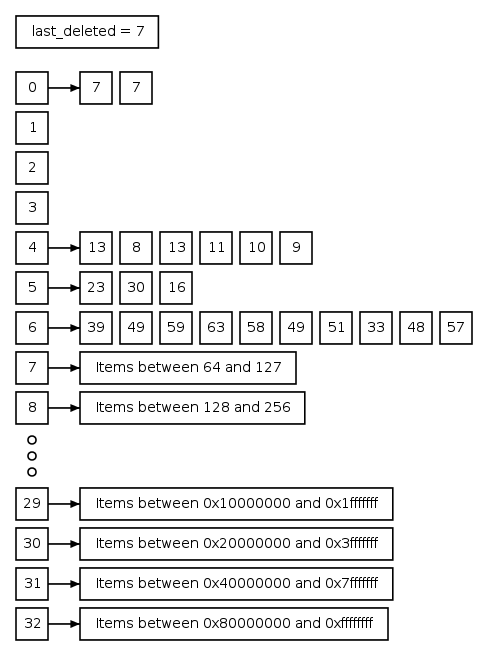

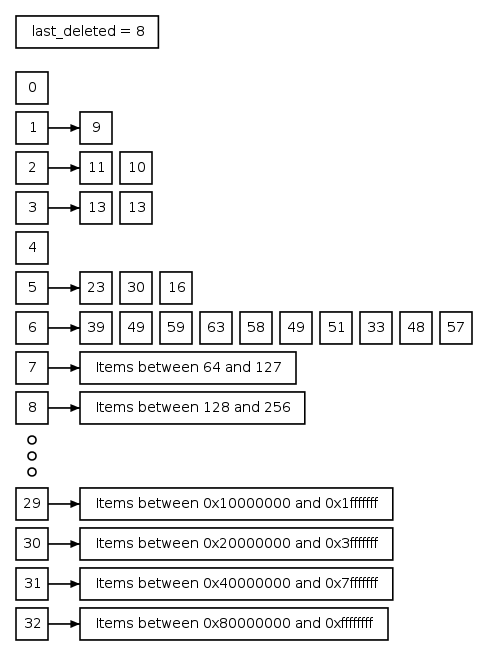

run_list: list[label] = new list[label](); thread_fork (child: fn() -> void) { run_list.append (me); child(); goto run_list.pop_head(); @me: } thread_yield() { run_list.append (me); goto run_list.pop_head (); @me: } thread_exit() { if (!run_list.is_empty()) goto run_list.pop_head(); else process_exit(); }

The run_list variable is a list of labels containing the current

positions of all the active threads. The keyword label in Oort is

simply a type specifier similar to string.

To create a new thread, thread_fork first saves the position of the

current thread on the list, and then it calls the child

function. Similarly, thread_yield yields to another thread by saving

the position of the current thread and jumping to the first label on

the list. Exiting a thread consists of jumping to the first thread if

there is one, and exiting the process if there isn’t.

The code above doesn’t actually run because the current Oort implementation doesn’t support genericity, but here is a somewhat uglier version that actually runs, while still demonstrating the principle.

Read more

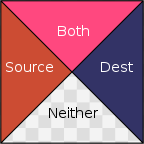

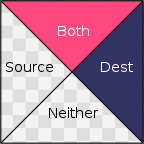

Let’s look at this in more detail. Please follow along

in the diagram to the right. First with probability

Let’s look at this in more detail. Please follow along

in the diagram to the right. First with probability